One of the things I like about cloud-hosted Kubernetes solutions is that they take the pain out of node management. My latest home lab goal was to replicate some of that functionality with RKE2.

Did I do it? Yes. Is there room for improvement? Of course, it's a software project.

The Problem

With RKE1, I have a documented and very manual process for replacing nodes in my clusters. For RKE1, it shapes up like this:

- Provision a new VM.

- Add a DNS Entry for the new VM.

- Edit the `cluster.yml` file for that cluster, adding the new VM with the appropriate roles to match the outgoing node.

- Run `rke up`.

- Edit the `cluster.yml` file for that cluster to remove the old VM.

- Run `rke up`.

- Modify the cluster’s ingress-nginx settings, adding the new external IP and removing the old one.

- Modify my reverse proxy to reflect the IP changes.

- Delete the old VM and its DNS entries.

Repeat the above process for every node in the cluster. Additionally, because the nodes could have slightly different Docker versions or updates, I often found myself provisioning a whole set of VMs at a time and going through this process for all the existing nodes at once. The process was fraught with problems, not the least of which was my having to remember every step.

A DNS Solution

I wrote a wrapper API to manage Windows DNS settings, and built calls to that wrapper into my Unifi Controller API so that, when I provision a new machine or remove an old one, it will add or remove the fixed IP from Unifi AND add or remove the appropriate DNS record for the machine.

Since I made DNS entries easier to manage, I also came up with a DNS naming scheme to help manage cluster traffic:

- Every control plane node gets an A record with `cp-<cluster name>.gerega.net`. This lets my kubeconfig files remain unchanged, and traffic is distributed across the control plane nodes via round robin DNS.

- Every node gets an A record with `tfx-<cluster name>.gerega.net`. This allows me to configure my external reverse proxy to use this hostname instead of an individual IP list. See below for more on this from a reverse proxy perspective.
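As a minimal sketch of the naming scheme above, the helper below returns the shared A record names a node would be registered under. The function name and its parameters are hypothetical; only the `cp-` and `tfx-` prefixes and the `gerega.net` domain come from the scheme itself.

```python
DOMAIN = "gerega.net"  # domain used in the naming scheme above


def shared_record_names(cluster: str, is_control_plane: bool) -> list[str]:
    """Return the shared A record names a node in `cluster` should carry.

    Hypothetical helper: every node gets the tfx- record, and control
    plane nodes additionally get the cp- record.
    """
    names = [f"tfx-{cluster}.{DOMAIN}"]
    if is_control_plane:
        names.append(f"cp-{cluster}.{DOMAIN}")
    return names
```

A provisioning script could loop over these names and create one A record per name pointing at the new node's IP.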

That solved most of my DNS problems, but I still had issues with the various rke up runs and compatibility worries.

Automating with RKE2

The provisioning process for RKE2 is much simpler than that for RKE1. I was able to shift the cluster configuration into the Packer provisioning scripts, which allowed me to do more within the associated PowerShell scripts. This, coupled with the DNS standards above, meant that I could run one script and end up with a completely provisioned RKE2 cluster.

I quickly realized that adding and removing nodes to/from the RKE2 clusters was equally easy. Adding a node to the cluster simply meant provisioning a new VM with the appropriate scripting to install RKE2 and join it to the existing control plane. Removing a node from the cluster was simple:

- Drain the node (`kubectl drain`).

- Delete the node from the cluster (`kubectl delete node/<node name>`).

- Delete the VM (and its associated DNS).

As long as I had at least one node with the server role running at all times, things worked fine.
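The drain and delete steps above can be sketched as a small wrapper around `kubectl`. This is an illustration, not the script I actually run: the function names are hypothetical, and the `--ignore-daemonsets` and `--delete-emptydir-data` flags are assumptions about what a typical drain needs. Deleting the VM and its DNS records is left out because it depends on your own tooling.

```python
import subprocess


def removal_commands(node: str) -> list[list[str]]:
    """Build the kubectl commands for retiring `node`, in order."""
    return [
        # Evict workloads. DaemonSet pods cannot be evicted, so ignore them;
        # pods using emptyDir volumes lose that data (assumed acceptable here).
        ["kubectl", "drain", node, "--ignore-daemonsets", "--delete-emptydir-data"],
        # Remove the node object from the cluster.
        ["kubectl", "delete", f"node/{node}"],
    ]


def remove_node(node: str, dry_run: bool = True) -> None:
    """Print each command and, when dry_run is False, execute it.

    subprocess.run(check=True) raises on a non-zero exit code, so the
    node is never deleted if the drain fails.
    """
    for cmd in removal_commands(node):
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

Calling `remove_node("<node name>")` only prints the commands; pass `dry_run=False` to run them against the current kubeconfig context.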

With RKE2, though, I decided to abandon my ingress-nginx installations in favor of using RKE2’s built-in Nginx Ingress. This allows me to skip managing the cluster’s external IPs, as the RKE2 installer handles that for me.

Proxying with Nginx

A little over a year ago I posted my updated network diagram, which introduced a hardware proxy in the form of a Raspberry Pi running Nginx. That little box is a workhorse, and plans are in place for a much-needed upgrade. However, in the meantime, it works.

My configuration was heavily IP based: I would configure upstream blocks with each cluster node’s IP set, and then my sites would be configured to proxy to those IPs. Think something like this:

    upstream cluster1 {
        server 10.1.2.50:80;
        server 10.1.2.51:80;
        server 10.1.2.52:80;
    }

    server {
        ## server settings

        location / {
            proxy_pass http://cluster1;
            # proxy settings
        }
    }

The issue here is that every time I add or remove a cluster node, I have to mess with this file. My DNS server is set up for round robin DNS, which means I should be able to add new A records with the same host name, and the DNS will cycle through the different servers.
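Before pointing the proxy at a shared name, it helps to confirm that the name really returns one A record per node. The sketch below does that with Python's standard `socket.getaddrinfo`; the function name `a_records` is hypothetical, and it reports whatever the local resolver returns, which may differ from what the proxy host sees.

```python
import socket


def a_records(hostname: str, port: int = 80) -> list[str]:
    """Return the sorted, de-duplicated IPv4 addresses resolved for hostname."""
    infos = socket.getaddrinfo(
        hostname, port, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    # Each entry is (family, type, proto, canonname, sockaddr);
    # for IPv4, sockaddr is an (ip, port) tuple.
    return sorted({info[4][0] for info in infos})
```

Calling `a_records("tfx-cluster1.gerega.net")` from a machine that uses the same DNS server should list every node IP registered under that name.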

My worry, though, was the Nginx reverse proxy. If I point the reverse proxy at a single DNS name, will it cache that IP? Nothing to do but test, right? So I changed my configuration as follows:

    upstream cluster1 {
        server tfx-cluster1.gerega.net:80;
    }

    server {
        ## server settings

        location / {
            proxy_pass http://cluster1;
            # proxy settings
        }
    }

Everything seemed to work, but how can I know it worked? For that, I dug into my Prometheus metrics.

Finding where my traffic is going

I spent a bit of time trying to figure out which metrics made the most sense to see the number of requests coming through each Nginx controller. As luck would have it, I always put a ServiceMonitor on my Nginx applications to make sure Prometheus is collecting data.

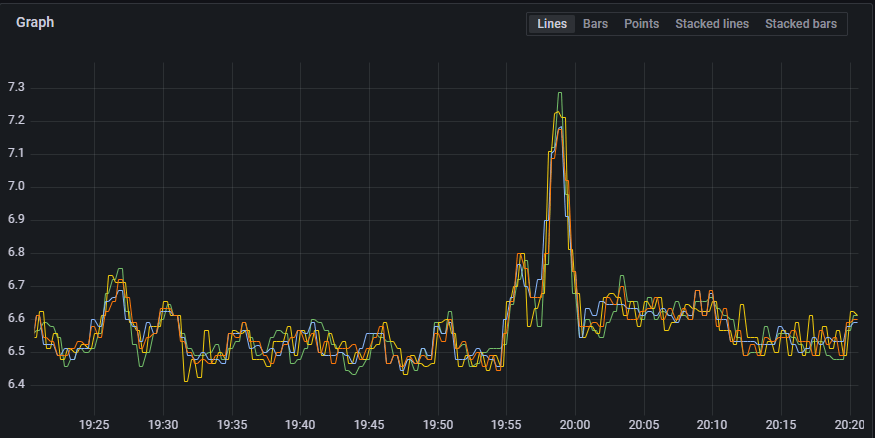

I dug around in the Nginx metrics and found nginx_ingress_controller_requests. With some experimentation, I found this query:

    sum(rate(nginx_ingress_controller_requests{cluster="internal"}[2m])) by (instance)

Looks easy, right? Basically, look at the sum of the rate of incoming requests by instance for a given time. Now, I could clean this up a little and add some rounding and such, but I really did not care about the number: I wanted to make sure that the requests across the instances were balanced effectively. I was not disappointed:

Each line is an Nginx controller pod in my internal cluster. Visually, things look to be balanced quite nicely!
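If you would rather have a number than eyeball the graph, the per-instance rates from that query can be turned into traffic shares. This is a rough sketch with hypothetical function names; it assumes you have already collected the rates into a dict keyed by instance.

```python
def request_shares(rates: dict[str, float]) -> dict[str, float]:
    """Convert per-instance request rates into each instance's share of the total."""
    total = sum(rates.values())
    if total == 0:
        return {instance: 0.0 for instance in rates}
    return {instance: rate / total for instance, rate in rates.items()}


def max_imbalance(rates: dict[str, float]) -> float:
    """Largest absolute gap between any instance's share and a perfectly even split.

    Returns 0.0 when there are no instances or no traffic to compare.
    """
    if not rates or sum(rates.values()) == 0:
        return 0.0
    even = 1 / len(rates)
    return max(abs(share - even) for share in request_shares(rates).values())
```

With three instances, an even split is one third each, so a result close to zero means the round robin is doing its job.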

Yet Another Migration

With the move to RKE2, I made more work for myself: I need to migrate my clusters from RKE1 to RKE2. With Argo, the migration should be pretty easy, but still, more home lab work.

I also came out of this with a laundry list of tech tips and other long form posts… I will be busy over the next few weeks.