I got all the things I needed to diagnose my BananaPi M5 issues. And I took a very long, windy road to a very simple solution. But I learned an awful lot in the process.

Reconstructing the BananaPi M5

I got tired of poking around the BananaPi M5 and decided to start from scratch. Because of the BananaPi's boot order, formatting the EMMC to get a clean slate meant I needed some hardware.

I ordered a USB-to-serial debug cable so that I could connect to the BananaPi (BPi from here on out), interrupt the boot sequence, and use U-Boot to wipe the disk (or at least the MBR). That would force the BPi to boot from the SD card. From there, I would follow the same steps I used to provision the BPi the first time around.

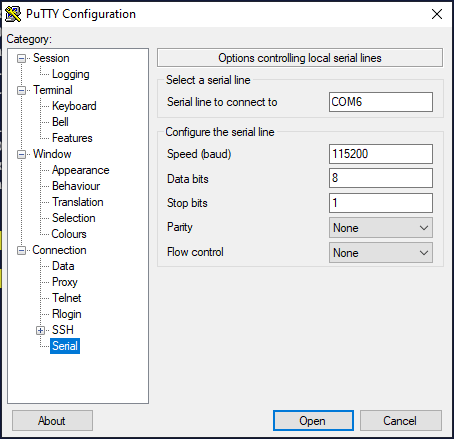

For reference, with the cable I bought, I was able to connect to the debug console using PuTTY with the following settings:

Your COM port will probably be different: open the Device Manager to find yours.

I also had to be a little careful about wiring. When I first hooked it up, I connected the transmit wire (white) to the Tx pin and the receive wire (green) to the Rx pin. That gave me nothing. Then I realized I had to cross them, since one side's transmit has to feed the other side's receive: the transmit wire (white) goes to the Rx pin, and the receive wire (green) goes to the Tx pin. Once swapped, the terminal lit up.

I hit the reset button on the BPi and, as soon as I could, hit Ctrl-C. This dropped me into the U-Boot console. I then followed these steps to erase the first 1000 blocks. That left me with a “cleanish” BPi. To fully wipe the EMMC, I booted from an SD card with the BPI Ubuntu image and wiped the entire disk:

    dd if=/dev/zero of=/dev/mmcblk0 bs=1M

Here /dev/mmcblk0 is the device path of the EMMC. This writes zeros across the entire EMMC, which cleaned it up nicely.
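Since dd will overwrite whatever it is pointed at, a small guard around the wipe can save some grief. This is only a minimal sketch, assuming GNU dd and a Linux /proc/mounts; the wipe_device name is made up for illustration, and it needs to run as root.

```shell
#!/usr/bin/env bash
# Hypothetical helper: zero a whole block device, refusing obvious mistakes.
wipe_device() {
    local dev="$1"

    # Refuse anything that is not a block device (typos, regular files, ...).
    if [ ! -b "$dev" ]; then
        echo "refusing: $dev is not a block device" >&2
        return 1
    fi

    # Refuse if the device or one of its partitions is currently mounted.
    if grep -q "^${dev}" /proc/mounts; then
        echo "refusing: $dev (or a partition on it) is mounted" >&2
        return 1
    fi

    # Same wipe as above, plus progress output and a final flush to disk.
    # dd ends with "No space left on device" once it reaches the end of
    # the device; for a full wipe that is expected.
    dd if=/dev/zero of="$dev" bs=1M status=progress conv=fsync
}
```

Called as wipe_device /dev/mmcblk0 from the SD-booted system, it should behave like the plain dd command, just with two sanity checks in front of it.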

New install, same problem

After following the steps to install Ubuntu 20.04 to the EMMC, I ran an apt upgrade and a do-release-upgrade to get up to 22.04.3. And the SAME network issue reared its ugly head. Back at it with fresh eyes, I determined that something had changed in the network configuration, and the cloud-init setup that had worked for this particular BPi image was no longer valid.

What were the symptoms? I combed through logs, but the easiest tell was that networkctl reported eth0 as unmanaged.
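That check is easy to script. The sketch below assumes the usual column layout of networkctl list (IDX, LINK, TYPE, OPERATIONAL, SETUP); link_setup_state is a hypothetical helper name, not something that ships with systemd.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `networkctl list` output on stdin and print the
# SETUP column (e.g. "configured" or "unmanaged") for one link.
link_setup_state() {
    local link="$1"
    # Columns are: IDX LINK TYPE OPERATIONAL SETUP
    awk -v link="$link" '$2 == link { print $5 }'
}

# Usage on the affected machine (not executed here):
#   networkctl list --no-legend --no-pager | link_setup_state eth0
```

If that prints unmanaged for eth0, nothing on the system has claimed the interface, which matches what I was seeing.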

So, I did two things: First, disable the network configuration in cloud-init by changing /etc/cloud/cloud.cfg.d/99-fake_cloud.cfg to the following:

    datasource_list: [ NoCloud, None ]
    datasource:
      NoCloud:
        fs_label: BPI-BOOT
    network: { config : disable }

Second, configure netplan by editing /etc/netplan/50-cloud-init.yaml:

    network:
      ethernets:
        eth0:
          dhcp4: true
          dhcp-identifier: mac
      version: 2

After that, I ran netplan generate and netplan apply, and the interface now showed as managed when executing networkctl. More importantly, after a reboot, the BPi initialized the network and everything is up and running.
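Since this fix will likely need repeating on the next rebuild, here is a rough sketch of how the netplan step could be scripted. The write_netplan_config function is a hypothetical helper; it just writes the same YAML shown above to whatever path it is given.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the DHCP-by-MAC netplan config to a file.
#   $1 = target file, $2 = interface name (defaults to eth0)
write_netplan_config() {
    local path="$1" iface="${2:-eth0}"
    cat > "$path" <<EOF
network:
  ethernets:
    ${iface}:
      dhcp4: true
      dhcp-identifier: mac
  version: 2
EOF
}

# Usage as root on the BPi (not executed here):
#   write_netplan_config /etc/netplan/50-cloud-init.yaml eth0
#   netplan generate && netplan apply
```

Keeping the file path as a parameter makes it possible to write to a scratch location first and inspect the result before touching /etc/netplan.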

Backup and Scripting

This will be the second proxy I’ve configured in under 2 months, so, well, now is the time to write the steps down and automate if possible.

Before I did anything, I created a bash script to copy important files off of the proxy and onto my NAS. This includes:

- Nginx configuration files

- Custom rsyslog file for sending logs to Loki

- Grafana Agent configuration file

- Files for certbot/cloudflare certificate generation

- The backup script itself.
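The general shape of such a script is roughly the following. This is a sketch rather than my actual script: backup_paths is a hypothetical function, and every path in the example call is a placeholder, not the real layout on my proxy or NAS.

```shell
#!/usr/bin/env bash
# Hypothetical backup helper: copy each listed path into a destination
# directory, keeping the full source path underneath it so that a restore
# is a straight copy back. Expects absolute source paths.
backup_paths() {
    local dest="$1"
    shift
    local src
    for src in "$@"; do
        if [ ! -e "$src" ]; then
            echo "skipping missing path: $src" >&2
            continue
        fi
        # e.g. /etc/nginx ends up at <dest>/etc/nginx
        mkdir -p "$dest/$(dirname "$src")"
        cp -a "$src" "$dest/$(dirname "$src")/"
    done
}

# Example call; all paths are placeholders (not executed here):
#   backup_paths /mnt/nas/proxy-backup \
#       /etc/nginx /etc/rsyslog.d/50-loki.conf /etc/grafana-agent.yaml
```

Mirroring the source paths under the destination keeps the restore side simple, since each file's original location is encoded in where it sits in the backup.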

With those files on the NAS, I scripted out restoration of the proxy to the fresh BPi. I will plan a little downtime to make the switch: while the switchover won’t be noticeable to the outside world, some of the internal networking takes a few minutes to swap over, and I would hate to have a streaming show go down in the middle of viewing. I would certainly take flak for that.