I like to keep my home lab running for both hosting sites (like this one) and experimenting with different tools and techniques. It is pretty nice to be able to spin up some containers in a cluster without fear of disturbing others’ work. And, since I do not hold myself to any SLA commitments, it is typically stress-free.

When I started learning Kubernetes, having the Rancher UI at my side made it a little easier to see what was going on in the cluster. Sure, I could use kubectl for that, but having a user interface just made things a little nicer while I learned the tricks of kubectl.

The past few Rancher upgrades, though, have not gone smoothly. I believe the Rancher team changed the way they handle their CRDs, and that has been interfering with how Argo manages my Rancher installation. But, as I went about researching a fix, I started to think…

Do I really need Rancher?

I came to realize that, well, I do not use Rancher’s UI nearly as often as I once did. I rely quite heavily on the Rancher Kubernetes Engine (RKE) command line tool to manage my clusters, but I do not really use any of the features that Rancher itself provides. Which features?

- Rancher’s RBAC is pretty useless for me, considering that I am the only one connecting to the clusters, and I use direct connections (not through Rancher’s APIs).

- Sure, I have single sign-on configured using Azure AD. But I do not think I have logged in to the UI in the last 6 months.

- With my Argo CD setup, I manage resources by storing the definitions in a Git repository. That means I do not use Rancher for the add-on applications for observability and logging.

This last upgrade cycle took the cake. I did my normal steps: bump the Rancher Helm chart version, commit, and let Argo do its thing. That’s when things blew up.

First, the new Rancher images are so big that they cause an image pull error due to timeouts. So, after manually pulling the images to the nodes, the containers started up. The logs showed them working on the CRDs. The node would get through to the “m’s” of the CRD list before timing out and cycling.

This wreaked havoc on ALL my clusters. I do not know if it was the constant thrashing of the disk or the server CPU, but things just started timing out. Once I realized Rancher was thrashing, and, well, I did not feel like fixing it, I started the process of deleting it.

Removing Rancher

After uninstalling the Rancher Helm chart, I ran the Rancher cleanup job that they provide. Even though I am using the Bitnami kube-prometheus chart to install Prometheus on each cluster, the cleanup job deleted all of my ServiceMonitor instances in each cluster. I had to go into Argo and re-sync the applications that were not set to auto-sync in order to recreate the ServiceMonitor instances.
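Finding the applications that need a manual re-sync can be scripted. The following is a minimal sketch, not what I actually ran: it assumes you have already loaded the Argo CD `Application` manifests as dictionaries (for example, from the `items` list of a JSON export), and it treats an application as auto-synced whenever `spec.syncPolicy.automated` is present. The function name `apps_needing_manual_sync` is made up for illustration.

```python
from typing import Any


def apps_needing_manual_sync(apps: list[dict[str, Any]]) -> list[str]:
    """Return the names of Argo CD Applications that have no automated sync policy.

    Assumption: `apps` is a list of Application manifests as plain dicts.
    An application counts as auto-synced if `spec.syncPolicy.automated`
    is set (an empty mapping is enough). This is a simplification.
    """
    names: list[str] = []
    for app in apps:
        sync_policy = app.get("spec", {}).get("syncPolicy") or {}
        if sync_policy.get("automated") is None:
            names.append(app["metadata"]["name"])
    return names
```

Anything this returns is a candidate for a manual sync after the cleanup job has run; anything it skips should be repaired by Argo on its own.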

Benchmarking Before and After

With Mimir in place, I already had metrics from all of those clusters, from both before and after the change, collected in a single place. That made creating a few Grafana dashboard panels a lot easier.

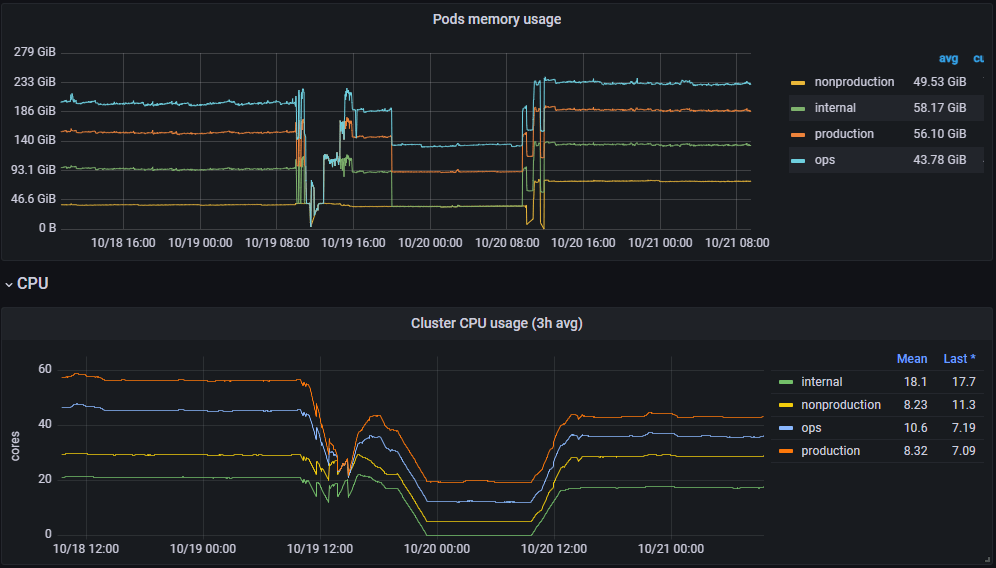

The absolute craziness that started around 10:00 EDT on 10/19 and ended around 10:00 EDT on 10/20 can be attributed to me trying to upgrade Rancher, failing, and then starting to delete Rancher from the clusters. We will ignore that time period, as it was caused by human error.
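If you would rather compare numbers than eyeball a panel, the same exclusion can be expressed in a few lines. This is only a sketch under assumptions: it expects the samples for one cluster as `(timestamp, value)` pairs that you have already exported from your metrics store, and `before_after_means` is a hypothetical helper, not part of Mimir or Grafana.

```python
from datetime import datetime
from statistics import mean

# One exported sample: (timestamp, value), e.g. CPU cores or bytes of memory.
Sample = tuple[datetime, float]


def before_after_means(
    samples: list[Sample],
    window_start: datetime,
    window_end: datetime,
) -> tuple[float, float]:
    """Average the samples before and after an excluded window.

    Samples that fall inside [window_start, window_end] are ignored,
    which is how the messy upgrade period is kept out of the comparison.
    """
    before = [value for ts, value in samples if ts < window_start]
    after = [value for ts, value in samples if ts > window_end]
    if not before or not after:
        raise ValueError("need at least one sample on each side of the window")
    return mean(before), mean(after)
```

Running this once per cluster and per metric gives a before/after pair that is not skewed by the upgrade attempt itself.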

Looking at the CPU usage first, there were some unexpected behaviors. I expected a drop in the ops cluster, which was running the Rancher UI, but the drop in CPU in the production cluster was larger than that of the internal cluster. Meanwhile, nonproduction went up, which is really strange. I may dig into those numbers a bit more by individual pod later.

What struck me more was that, across all clusters, memory usage went up. I have no immediate answers to that one, but you can be sure I will be digging in a bit more to figure that out.

So, while Rancher was certainly using some CPU, it would seem that I am seeing increased memory usage without it. When I have answers to that, you’ll have answers to that.